Artificial Intelligence (AI) is transforming how organizations recruit, evaluate, and manage employees. From automated résumé screening to predictive analytics in performance reviews, HR departments increasingly rely on algorithms to make faster, data-driven decisions. But with this technological leap comes a new era of ethical, legal, and compliance challenges—especially when AI systems unintentionally discriminate or make flawed decisions.

At Lauth Investigations International, we recognize how these risks can evolve into full-scale corporate investigations, compliance breaches, or EEO disputes. Understanding the pitfalls of algorithmic bias and establishing proactive oversight is essential for every business that values fairness, accountability, and transparency.

Understanding Algorithmic Bias in the Workplace

AI systems learn from historical data. When that data reflects human bias—whether related to gender, race, age, or disability—the system can perpetuate or even amplify those biases. For instance, an AI tool trained on years of hiring data from a male-dominated workforce may favor male applicants over equally qualified women.

A private investigation into such cases often reveals that the bias was not intentional but embedded in the algorithm’s design. Unfortunately, “unintentional” doesn’t absolve an organization from liability. The Equal Employment Opportunity Commission (EEOC) has already issued warnings that algorithmic discrimination may violate federal law, particularly Title VII of the Civil Rights Act of 1964. To mitigate these risks, HR leaders must understand not only what their AI tools do but also how those tools reach their decisions.

The Legal and Ethical Implications of AI in HR

Using AI in hiring, promotion, or disciplinary processes without oversight can expose companies to significant legal risks. When an employee alleges unfair treatment or discrimination, the organization may face an internal or external corporate investigation to determine whether bias played a role.

Common risks include:

- Discriminatory outcomes: AI systems can unintentionally exclude protected groups.

- Lack of transparency: “Black box” algorithms make it difficult to trace or justify a decision.

- Data privacy breaches: Sensitive employee data may be mishandled or analyzed beyond consent.

- Inconsistent accountability: When HR relies on AI decisions, it can be unclear who holds responsibility: HR staff, IT teams, or vendors.

The consequences of ignoring these risks can range from financial penalties and reputational harm to mandatory audits or compliance investigations.

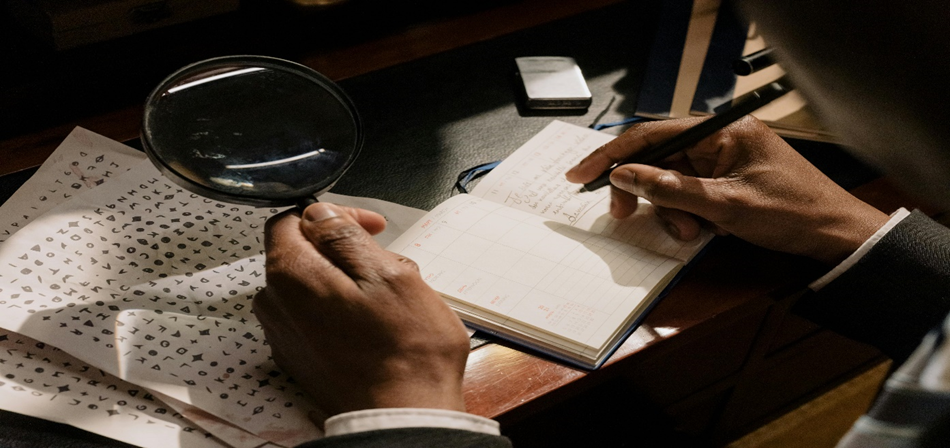

The Role of Corporate and Private Investigators

When an AI-driven HR process leads to complaints or suspected bias, organizations often call in a private investigator to conduct a neutral fact-finding review. At Lauth Investigations International, our team specializes in corporate investigations that involve compliance, discrimination, and ethical misconduct.

Our investigators assess:

- How the AI system was designed, deployed, and monitored

- Whether HR personnel had adequate training on AI tools

- The data sources used to train the model

- Whether adverse impacts align with protected categories under federal or state law

By approaching these cases with both technological understanding and investigative rigor, we help organizations identify the root causes of algorithmic bias and recommend remediation strategies that strengthen compliance frameworks.

Best Practices for Ethical AI in HR

Organizations don’t need to abandon AI—they just need to manage it responsibly. Here are key strategies to reduce bias and prevent the need for reactive investigations:

- Conduct Regular Audits: Perform internal audits or hire an independent investigator to review AI-based HR tools. Identify patterns of bias or disparities before they escalate.

- Enhance Transparency: Require vendors to explain how algorithms make decisions. Documentation should include training data sources, decision pathways, and accuracy rates across demographic groups.

- Human Oversight: Ensure that all AI-driven decisions are reviewed by qualified HR professionals before final action. Automation should support, not replace, human judgment.

- EEO Compliance Training: Train HR teams to recognize algorithmic bias and understand their legal obligations under EEOC and OSHA frameworks.

- Data Governance: Protect employee data through strict access controls and encryption. Mismanagement of data can trigger both privacy violations and reputational harm.
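To make the audit step above more concrete, here is a minimal Python sketch of one common screening check: comparing selection rates across groups against the "four-fifths" rule of thumb from the federal Uniform Guidelines on Employee Selection Procedures. The function names, group labels, and sample counts are hypothetical and chosen only for illustration. A ratio below 0.8 is a signal to investigate further, not a legal finding of discrimination.

```python
from collections import Counter

# Four-fifths rule of thumb: a group's selection rate below 80% of the
# highest group's rate is commonly treated as a flag for closer review.
FOUR_FIFTHS = 0.8


def selection_rates(records):
    """Return the selection rate per group.

    records: iterable of (group, was_selected) pairs, one per applicant.
    """
    applicants = Counter()
    selected = Counter()
    for group, was_selected in records:
        applicants[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / applicants[group] for group in applicants}


def impact_ratios(rates):
    """Divide each group's rate by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        # Nobody was selected, so no ratio can be computed.
        return {group: None for group in rates}
    return {group: rate / top for group, rate in rates.items()}


def flag_groups(rates, threshold=FOUR_FIFTHS):
    """List the groups whose impact ratio falls below the threshold."""
    ratios = impact_ratios(rates)
    return sorted(
        group
        for group, ratio in ratios.items()
        if ratio is not None and ratio < threshold
    )


# Hypothetical screening results (illustrative numbers, not real data):
# 50 of 100 applicants selected in group_a, 30 of 100 in group_b.
sample_records = (
    [("group_a", True)] * 50
    + [("group_a", False)] * 50
    + [("group_b", True)] * 30
    + [("group_b", False)] * 70
)

sample_rates = selection_rates(sample_records)
print("Selection rates:", sample_rates)
print("Impact ratios:", impact_ratios(sample_rates))
print("Groups to review:", flag_groups(sample_rates))
```

In this hypothetical sample, group_b is selected at 30% against 50% for group_a, so it falls below the four-fifths threshold and would be flagged for review. A real audit would also account for sample size and statistical significance, and would be interpreted together with legal counsel.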

Aligning AI Governance with Corporate Integrity

A well-governed AI system is not just a compliance tool—it’s a reflection of a company’s integrity. Transparent and fair decision-making fosters employee trust and strengthens the organization’s public image.

When AI ethics are overlooked, however, companies may find themselves under scrutiny—by regulators, stakeholders, or employees. A corporate investigation in such cases often reveals deeper systemic failures, from poor oversight to negligence in algorithm selection. At Lauth Investigations International, our mission is to help organizations detect these issues early and create resilient compliance systems that prevent future misconduct.

Conclusion

As AI continues to shape the modern workplace, businesses must balance innovation with accountability. The future will favor organizations that not only adopt AI but also embrace ethical governance and transparent oversight. If your organization faces concerns about discrimination, bias, or compliance failures related to AI-driven HR processes, our expert private investigators are ready to help.

Contact Lauth Investigations International today to learn more about our corporate investigation and AI & compliance/EEO services at https://lauthinvestigations.com